Technology has changed dramatically over the past two centuries, and a handful of milestones account for much of the modern era. From the first electronic computers to artificial intelligence, each breakthrough built on the last and changed how we live, work, and communicate.

This article walks through that history and highlights the milestones with the greatest impact on society: the evolution of computing, the emergence of the internet, and the rise of newer technologies such as AI, blockchain, and the Internet of Things (IoT).

The Dawn of Computing

The history of computing dates back to the 1830s, when Charles Babbage designed the Analytical Engine, a mechanical general-purpose computer with separate units for performing calculations and storing data. The machine was never completed, and it wasn't until the 20th century that the first electronic computers were built.

One of the earliest electronic computers was the Electronic Numerical Integrator and Computer (ENIAC), completed in 1945. ENIAC was a massive machine that occupied an entire room and used vacuum tubes to perform calculations. The invention of the transistor at Bell Labs in 1947 led, over the following decade, to smaller, faster, and more efficient computers.

Commercial computers first appeared in the early 1950s, and the 1960s brought a landmark with the IBM System/360, announced in 1964. This family of computers was designed so that the same software could run across models of different sizes and prices, and it became a widely used platform for businesses and governments.

The Microprocessor Revolution

The 1970s witnessed a significant milestone in computing with the invention of the microprocessor. The microprocessor, a central processing unit (CPU) on a single chip of silicon, revolutionized the computer industry by making it possible to build smaller, cheaper, and more efficient computers.

The first commercially available microprocessor, the Intel 4004, was released in 1971 and had a maximum clock speed of 740 kHz. This innovation led to the development of personal computers later in the decade, which democratized access to computing and transformed the way people lived and worked.

The Internet and the World Wide Web

The internet, a network of interconnected computers, has its roots in the 1960s. The United States Department of Defense's Advanced Research Projects Agency (ARPA) funded a project to create a network of computers that could communicate with each other.

The first network, called ARPANET, carried its first messages in 1969 and grew through the 1970s. The internet as we know it today began to take shape in the 1980s, when ARPANET switched to the TCP/IP protocol suite in 1983 and the Domain Name System (DNS) was introduced the same year.

The World Wide Web, invented by Tim Berners-Lee at CERN in 1989, made it easy for people to access and share information using web browsers and hyperlinks. The web was initially designed to help scientists share information, but it quickly expanded to become a global network of information and services.

The Rise of E-commerce and Social Media

The widespread adoption of the internet and the web led to the emergence of e-commerce and social media. Online shopping, which took off in the mid-1990s, has become a multitrillion-dollar global industry, with companies like Amazon and Alibaba among its largest players.

Social media platforms, such as Facebook, Twitter, and Instagram, have transformed the way people communicate and interact with each other. These platforms have also created new opportunities for businesses to reach their customers and build their brands.

Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) have become increasingly important in recent years, with applications in areas such as computer vision, natural language processing, and robotics.

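The term "artificial intelligence" was coined by John McCarthy in his 1955 proposal for the Dartmouth workshop, held in the summer of 1956 and widely regarded as the founding event of the field. Expert systems, designed to mimic human decision-making with hand-written rules, emerged from research in the 1960s and 1970s and saw wide commercial use in the 1980s.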

In the 1990s and 2000s, AI research focused increasingly on machine learning algorithms that learn from data rather than from hand-written rules. Since the early 2010s, the widespread adoption of deep learning techniques, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), has led to significant advances in areas such as image recognition and natural language processing.

Blockchain and the Internet of Things

Blockchain technology, first introduced in 2008 as the ledger underlying Bitcoin, has gained significant attention in recent years due to its potential to provide tamper-evident, transparent data storage and transfer without relying on a central authority.
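To make the idea of tamper-evident storage concrete, here is a minimal sketch of a hash chain in Python, the data structure at the heart of a blockchain. It is an illustration under simplifying assumptions, not how any particular blockchain is implemented: it leaves out networking, consensus, and proof of work, and the names `block_hash`, `build_chain`, and `is_valid` are hypothetical helpers chosen for this example.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Return the SHA-256 hex digest of a block's contents."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records into a chain where each block stores the previous block's hash."""
    chain = []
    prev_hash = GENESIS_PREV_HASH
    for index, data in enumerate(records):
        current_hash = block_hash(index, data, prev_hash)
        chain.append(
            {"index": index, "data": data, "prev_hash": prev_hash, "hash": current_hash}
        )
        prev_hash = current_hash
    return chain


def is_valid(chain):
    """Recompute every hash and check that each block points at its predecessor."""
    prev_hash = GENESIS_PREV_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
        prev_hash = block["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain(["alice pays bob 5", "bob pays carol 2"])
    print(is_valid(chain))  # the untouched chain passes validation
    chain[0]["data"] = "alice pays bob 500"
    print(is_valid(chain))  # the edited block no longer matches its stored hash
```

Because each block's hash covers the previous block's hash, changing any earlier record invalidates that block unless every later block is recomputed too. Real systems add a consensus mechanism so that rewriting the chain in this way is impractical.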

The Internet of Things (IoT), which refers to the network of physical devices, vehicles, and other items embedded with sensors, software, and connectivity, has also become increasingly important. IoT devices can collect and exchange data, enabling applications such as smart homes, cities, and industries.

Conclusion: Embracing the Future of Technology

The history of technology is a story of continuous innovation and discovery. From the invention of the first computer to the development of AI, blockchain, and the IoT, each breakthrough has paved the way for further advancements.

As we look to the future, it is clear that technology will continue to play an increasingly important role in shaping our world. Whether it is through the development of new materials, the creation of more efficient algorithms, or the exploration of new frontiers, the possibilities are endless.

We invite you to share your thoughts on the future of technology and how it will impact our lives. What innovations do you think will have the most significant impact? How can we ensure that technology is developed and used responsibly? Let us know in the comments below!

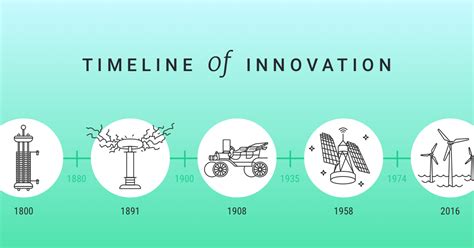

Gallery of Technology Milestones

What is the most significant innovation in technology?

Arguably the most significant innovation in technology is the development of the internet and the World Wide Web, which has enabled global communication, e-commerce, and access to information.

What is the future of artificial intelligence?

The future of artificial intelligence is expected to be characterized by increased use of deep learning techniques, natural language processing, and computer vision. AI is likely to have a significant impact on industries such as healthcare, finance, and transportation.

What are the potential risks of blockchain technology?

The potential risks of blockchain technology include hacking, data breaches, and regulatory uncertainty. The use of blockchain technology can also raise concerns about energy consumption and environmental impact.